You might not have heard much about Google's Imagen generative AI, since most of the news about this technology over the years has revolved around Midjourney, DALL-E, and now GPT-4o.

Imagen 4 Is Not a Revolution

If you watch the Google I/O 2025 video starting at the 1:19:00 mark, you'll see the main announcements for Imagen 4, Google's latest and greatest image generation technology.

This release doesn't seem to be about entirely new capabilities or massive leaps in quality. Instead, Imagen 4 is about richer images, finer details, and higher resolution. While the presenter calls it a "big leap forward", it's clearly more of a refinement of what Imagen 3 could already do than a revolutionary new model.

Accuracy and Predictability Matter More Now

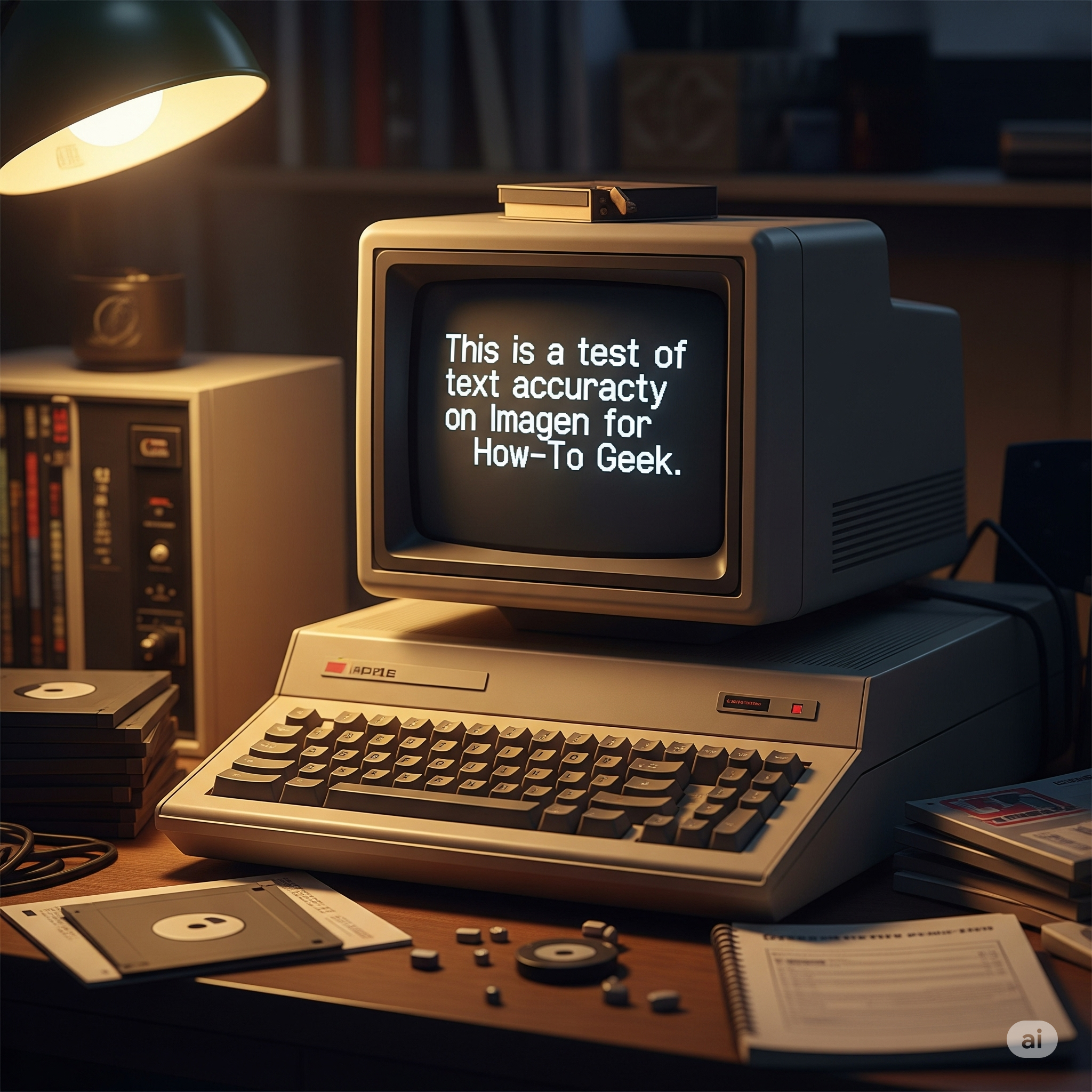

Apart from improving Imagen's overall performance and functionality, the main theme here is better control and prompt adherence. Imagen 4 will stick much more closely to your prompt and won't make mistakes with elements such as text or specific eye colors.

This is important, because while it's cool that you can create beautiful images by throwing prompts into tools like Midjourney, AI image generation needs predictable and controllable results if you ever want to use it as a serious tool. Based on my own experiments with the light, fast version of Imagen 4 that comes with my Google AI subscription, the biggest advancements do seem to be accuracy and control.

I think we can largely consider the problem of garbled text in AI images solved, both here with Imagen 4 and in other major image generation systems. Not only is it solved, but the model even produces a consistent layout and makes suitable font choices. Just look at this birthday poster I asked for.

Gemini labels the images above as Imagen 4 images, but Imagen 4 images supposedly don't carry the visible watermark. This is still part of the free preview for Google AI subscribers, and the results are in line with what Imagen 4 promises for its light and fast model, but I thought it was worth mentioning.

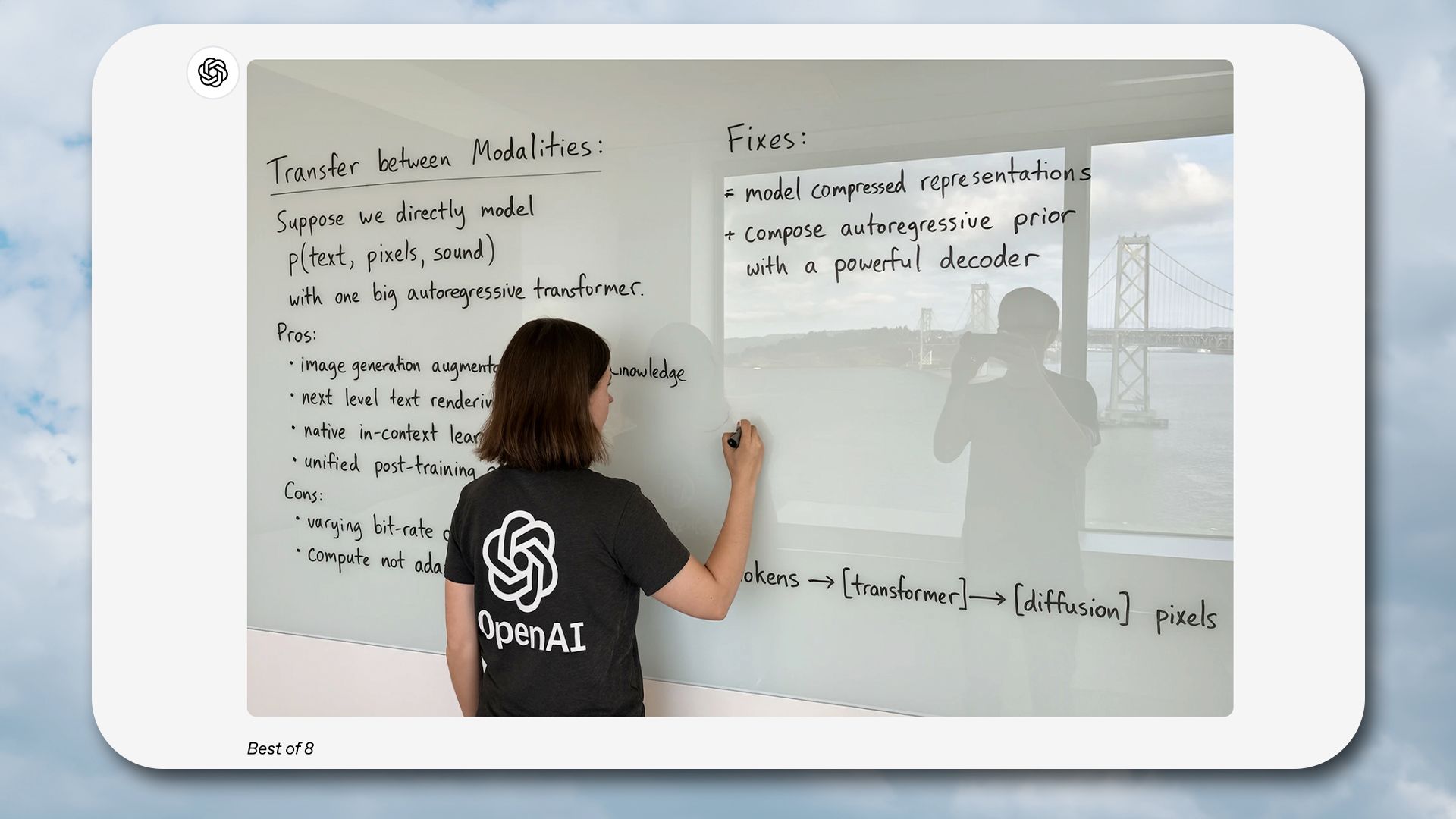

This Is the Final Phase of Mainstreaming AI Image Generation

By and large, it seems to me that the major work in AI image generation is done. It happened so quickly, and in such big leaps, that it boggles the mind. All that's really left now is polish, polish, and more polish.

With the ability to make detailed edits to images you've already generated, and precise control over what appears in an image, where it appears, and what it looks like, I think image generation is almost ready to move out of the experimental beta phase in applications like Photoshop.

The Next Step Is Efficiency

One area that will always need improvement is cost and speed. You may have read that generative AI uses plenty of electricity and is actually quite expensive to run. This raises environmental concerns, and, of course, that computing power could have been used for something less trivial. However, the cost of generating images is going down, and the speed is going up.

This is happening for a few reasons, the most obvious being that the models themselves are becoming smaller and more efficient. Specialized AI hardware is also more common in data centers now, and these chips can do the necessary math faster and with less power. Finally, computation in general keeps getting cheaper, as long as microchips keep improving.

In the case of Imagen, Google claims that Imagen 4 images cost four cents each to generate, which is 25% more than Imagen 3. But because the new model is so much faster and produces higher-quality results, it is effectively cheaper once you factor all of that in.
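One way to see how a pricier model can still work out cheaper is to look at the cost per image you actually keep, rather than the cost per attempt. The sketch below is purely illustrative: only the four-cent price and the "25% more" figure come from the claim above, the Imagen 3 price is simply back-calculated from them, and the success rates (how often an attempt gives you a usable image) are made-up numbers, not measurements.

```python
# Illustrative only: the 4-cent price and "25% more" figure are from the article;
# the implied Imagen 3 price and both success rates are hypothetical assumptions.
IMAGEN4_PRICE = 0.04                  # dollars per generated image (claimed)
IMAGEN3_PRICE = IMAGEN4_PRICE / 1.25  # implied by "25% more", i.e. 3.2 cents


def cost_per_usable_image(price: float, success_rate: float) -> float:
    """Expected spend per image you keep, assuming each attempt independently
    succeeds with probability success_rate, so you need 1 / success_rate
    attempts on average (a geometric distribution)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return price / success_rate


# Hypothetical success rates: better prompt adherence means fewer discarded retries.
imagen3_cost = cost_per_usable_image(IMAGEN3_PRICE, 0.5)
imagen4_cost = cost_per_usable_image(IMAGEN4_PRICE, 0.8)

if __name__ == "__main__":
    print(f"Imagen 3: ${imagen3_cost:.3f} per usable image")
    print(f"Imagen 4: ${imagen4_cost:.3f} per usable image")
```

With these made-up rates, the model that costs more per attempt comes out cheaper per usable image. Note that this only captures the "fewer retries" side of the argument; faster generation mostly saves you time rather than money per image.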

Going forward, I expect most of the effort to go into making image generation cheap, both in money and in power. Ultimately, what you want is this level of quality and speed from local models running on the hardware you have with you, not somewhere in the cloud.